As technology relentlessly marches forward, Apple’s unveiling of the Vision Pro represents a watershed moment in the evolution of mixed reality interfaces.

The age of cumbersome, hand-held physical controllers appears to be waning.

In its place, we’re presented with a future where the boundary between technology and user becomes increasingly blurred, allowing for an interaction experience that feels as natural as a handshake or a glance.

The Vision Pro heralds an era where augmented and virtual reality are not just about viewing another world but about immersing yourself within it using your most innate forms of communication: the subtle dance of your hands and the piercing intent of your gaze.


How Do Hand Gestures Work with the Vision Pro?


The Vision Pro offers a deeply immersive experience, orchestrated by the synergy between hand gestures and eye movements. But how exactly does this cutting-edge technology work?

Interplay of Cameras and Sensors: Central to Vision Pro’s design is its high-tech ensemble of cameras and sensors. These components do more than just detect rudimentary movements.

They are fine-tuned to capture a spectrum of motions, from the delicate act of pinching fingers together to the more pronounced sweeps and waves of the hand.

By emulating real-world interactions, Vision Pro ensures that you don’t feel like you’re learning a new language but rather speaking with familiar gestures in a new world.

The Role of Eye Tracking: What truly sets Vision Pro apart is its sophisticated integration of eye-tracking. This is more than just a passive feature; it sets the stage for the gestures.

The eyes act as a pointer, indicating areas of interest or potential interaction within the virtual environment. This action of ‘looking’ becomes your first touch point in the virtual space, making actions like highlighting buttons or summoning menus as intuitive as a simple gaze.
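To make the "eyes as a pointer" idea concrete, here is a minimal Python sketch of gaze targeting. It is not Apple's implementation. It rests on three assumptions: the eyes sit at the origin, the gaze arrives as a 3-D direction vector, and each hypothetical `Target` has a known centre position. The looked-at item is the one closest in angle to the gaze ray, within an assumed 3-degree tolerance.

```python
import math
from dataclasses import dataclass


@dataclass
class Target:
    """A hypothetical selectable item; centre is in metres, relative to the eyes."""
    name: str
    center: tuple


def angle_between(u, v):
    """Angle in degrees between two 3-D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.hypot(*u) * math.hypot(*v)
    cos = max(-1.0, min(1.0, dot / norms))  # clamp against floating-point rounding
    return math.degrees(math.acos(cos))


def gaze_target(gaze_dir, targets, max_angle_deg=3.0):
    """Return the target closest in angle to the gaze ray, or None if none is within tolerance."""
    best, best_angle = None, max_angle_deg
    for t in targets:
        # Eyes are at the origin, so a target's centre is also its direction.
        angle = angle_between(gaze_dir, t.center)
        if angle <= best_angle:
            best, best_angle = t, angle
    return best


targets = [Target("Safari", (-0.3, 0.0, -1.0)), Target("Photos", (0.3, 0.0, -1.0))]
print(gaze_target((0.3, 0.0, -1.0), targets).name)  # Photos
```

Picking by angle rather than by exact ray intersection tolerates small wobbles in the measured gaze. The tolerance value here is only an illustrative guess.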

Harmonious Interaction of Gaze and Gesture: The fusion of eye movement with hand gesture is the crux of Vision Pro’s interactivity. Once an item or function is highlighted by your gaze, the subsequent hand gesture then dictates the specific interaction with that item.

For example, after using your eyes to select a digital book in the virtual environment, a pinch gesture might open it, while a swiping motion turns its pages.
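The gaze-then-gesture flow from the digital book example can be sketched as a small dispatcher. Everything here is hypothetical and none of it is a visionOS API. The `Book` class and the gesture names are invented, and so is the assumption that the app receives the currently focused object.

```python
class Book:
    """Hypothetical virtual book that reacts to gestures once it has the user's gaze."""

    def __init__(self, title, pages):
        self.title = title
        self.pages = pages
        self.is_open = False
        self.page = 0

    def handle(self, gesture):
        if gesture == "pinch":
            self.is_open = True
        elif gesture == "swipe" and self.is_open:
            self.page = min(self.page + 1, self.pages - 1)  # next page, stopping at the last


def dispatch(gesture, focused):
    """Route a hand gesture to whatever the eyes currently highlight."""
    if focused is None:
        return False  # nothing under the gaze, so the gesture is ignored
    focused.handle(gesture)
    return True


book = Book("Field Guide", pages=3)
dispatch("pinch", book)  # look at the book and pinch: it opens
dispatch("swipe", book)  # swipe: turn to the next page
print(book.is_open, book.page)  # True 1
```

The gaze only decides the target. The gesture alone decides what happens to it, which is why the same pinch can open a book, press a button or select a photo.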

Precision and Relaxation Coexist: A standout feature of Vision Pro’s gesture system is that it lets your hands relax. The external cameras track hand movement precisely enough that you don’t need to hold your hands up or maintain a rigid posture.

Even if your hands are resting casually in your lap, Vision Pro captures the intent and movement accurately, ensuring that immersion is never broken by technological constraints.
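One way a pinch can be recognised wherever the hand happens to be is to look only at the distance between thumb and index fingertips. The sketch below illustrates that idea under stated assumptions and is not how Apple does it. Fingertip positions are plain (x, y, z) tuples in metres, and the 1.5 cm and 3 cm thresholds are made-up values.

```python
import math

PINCH_ON_M = 0.015   # assumed: fingertips closer than 1.5 cm start a pinch
PINCH_OFF_M = 0.030  # assumed: fingertips farther than 3 cm end it


class PinchDetector:
    """Reports pinch start/end from thumb-tip and index-tip positions (metres).

    Only the distance *between* the fingertips is used, so the result is the same
    whether the hand is raised in front of you or resting in your lap. The gap
    between the two thresholds (hysteresis) stops the pinch flickering on and off
    when the fingertips hover near a single cut-off.
    """

    def __init__(self):
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        gap = math.dist(thumb_tip, index_tip)
        if not self.pinching and gap < PINCH_ON_M:
            self.pinching = True
            return "pinch_began"
        if self.pinching and gap > PINCH_OFF_M:
            self.pinching = False
            return "pinch_ended"
        return None


# Same finger geometry, hand resting low vs. raised: both register a pinch.
print(PinchDetector().update((0.0, -0.5, -0.3), (0.01, -0.5, -0.3)))  # pinch_began
print(PinchDetector().update((0.2, 0.1, -0.4), (0.21, 0.1, -0.4)))    # pinch_began
```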

How to Use Hand Gestures with Apple’s Vision Pro?

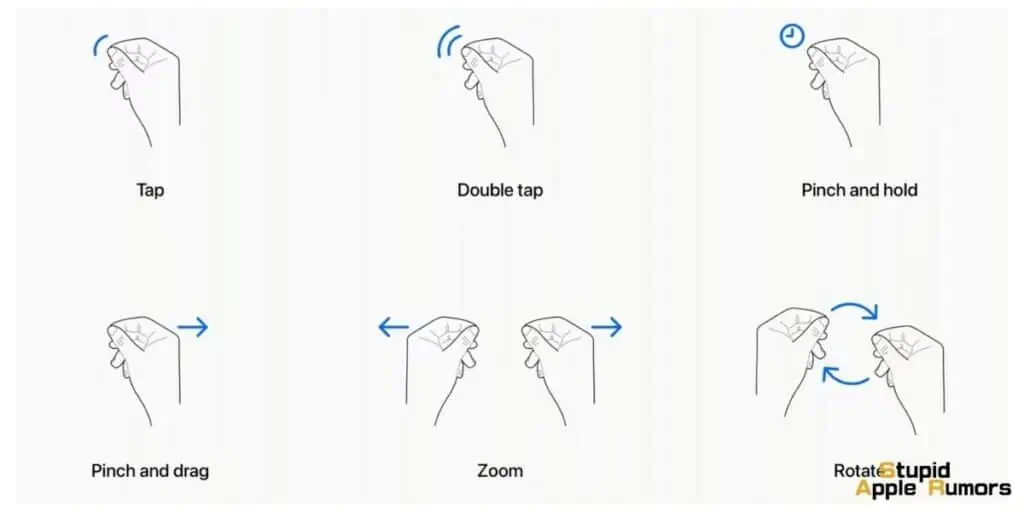

Apple has designed six core hand gestures, built directly into the Vision Pro’s software:

- Tap: Simply bring your thumb and index finger together, akin to a pinch. This acts much like tapping your iPhone’s screen, signaling the headset to act on the virtual object you’re looking at.

- Double Tap: This gesture involves the same motion but performed twice in quick succession, mirroring a double-tap action.

- Pinch and Hold: Echoing tap-and-hold on the iPhone, this gesture performs actions such as highlighting text.

- Pinch and Drag: This gesture lets you scroll through content or move windows in the virtual space. The faster you move your hand, the faster you scroll.

- Zoom: Engaging both hands, pinching and then pulling your hands apart will zoom in. Conversely, pushing your hands together will zoom out. This gesture is also employed to adjust window sizes, much like dragging window corners.

- Rotate: By pinching your fingers and rotating your hands, you can maneuver virtual objects, altering their orientation in the virtual space.
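Several of these gestures differ only in timing, or in how two pinching hands move relative to each other. The sketch below illustrates both ideas, assuming pinch start/end timestamps and 2-D pinch positions are already available. The functions are hypothetical. The thresholds (0.5 s for a hold, 0.3 s between double-tap pinches) are illustrative guesses, not Apple's values.

```python
import math

HOLD_S = 0.5        # assumed: a pinch held at least this long counts as "pinch and hold"
DOUBLE_TAP_S = 0.3  # assumed: max gap between two quick pinches for a "double tap"


def classify_pinches(pinches):
    """Label a sequence of (start, end) pinch times, in seconds, as discrete gestures.

    A live system would have to wait DOUBLE_TAP_S before confirming a single tap;
    this offline version sees the whole sequence at once.
    """
    gestures = []
    last_end = float("-inf")
    for start, end in pinches:
        if end - start >= HOLD_S:
            gestures.append(("pinch_hold", start))
        elif gestures and gestures[-1][0] == "tap" and start - last_end <= DOUBLE_TAP_S:
            # Second quick pinch: upgrade the previous tap to a double tap.
            gestures[-1] = ("double_tap", gestures[-1][1])
        else:
            gestures.append(("tap", start))
        last_end = end
    return gestures


def zoom_scale(left0, right0, left1, right1):
    """Zoom factor from two pinching hands: > 1 as they move apart, < 1 as they move together."""
    return math.dist(left1, right1) / math.dist(left0, right0)


def rotation_deg(left0, right0, left1, right1):
    """Counter-clockwise rotation, in degrees, of the line joining the two hands (x-y plane)."""
    a0 = math.atan2(right0[1] - left0[1], right0[0] - left0[0])
    a1 = math.atan2(right1[1] - left1[1], right1[0] - left1[0])
    delta = math.degrees(a1 - a0)
    return (delta + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)


print(classify_pinches([(0.0, 0.1), (0.25, 0.35), (1.0, 1.8)]))
# [('double_tap', 0.0), ('pinch_hold', 1.0)]
```

Measuring zoom and rotation relative to where the hands were when the pinch began keeps both gestures independent of where your hands are in space.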

There are a few other ways to interact with the Vision Pro:

- Selecting Items: You can simply gaze at a desired item on the display. Confirming the selection? Just tap your fingers together. Such intuitive interactions promise a more immersive experience.

- Scrolling and Navigation: Forget exaggerated hand movements! A swift flick suffices for scrolling through content. Other interactions, such as launching a search or dictating text, involve eyeing the microphone icon and vocalizing your command. Moreover, Apple’s trusty assistant, Siri, remains at your beck and call, enabling you to open apps, shuffle through your music, and execute a range of commands.

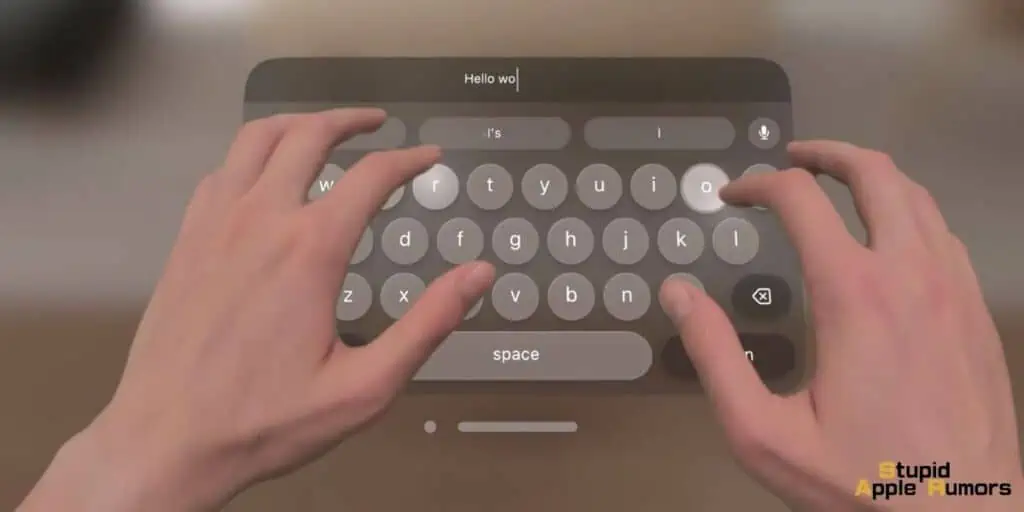

- Typing and Input: While many might prefer the tactile response of a connected iPhone or a Bluetooth keyboard, the Vision Pro offers an innovative alternative. You can type using the visionOS virtual keyboard. If typing isn’t your thing, the dictation feature steps in as a handy alternative.
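A "swift flick" scroll usually relies on momentum: content keeps gliding after your hand stops, then slows to a halt. Below is a minimal Python sketch of that behaviour. It is not Apple's scrolling physics. The per-frame friction factor, the 90 Hz update rate and the points-per-second unit are all assumptions.

```python
def momentum_scroll(release_velocity, friction=0.95, dt=1 / 90, min_speed=1.0):
    """Simulate inertial scrolling after a flick.

    release_velocity: hand speed at release, in points per second (assumed unit).
    friction: fraction of velocity kept each frame (assumed value, must be < 1).
    dt: frame duration in seconds (90 Hz assumed).
    Returns the cumulative scroll offset after each frame.
    """
    offsets = []
    position, velocity = 0.0, release_velocity
    while abs(velocity) >= min_speed:
        position += velocity * dt
        offsets.append(position)
        velocity *= friction  # exponential decay: the glide slows smoothly to a stop
    return offsets


quick = momentum_scroll(2000.0)
gentle = momentum_scroll(500.0)
print(quick[-1] > gentle[-1])  # True: a faster flick glides further
```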

The Learning Curve

Every revolution comes with its set of challenges. The Vision Pro’s gesture-based interface, while groundbreaking, is a departure from the controller-based systems prevalent in most headsets today.

Consequently, you might find yourself grappling with this new method initially. But as with every innovation, an adaptation period is par for the course.

Apple, in its signature style, has ensured that while you experience the new, you aren’t thrown into completely unfamiliar waters.

The app interface, reminiscent of the well-loved iPhone and iPad layouts, offers a sense of comfort. The “Home View” on the Vision Pro mirrors the Home Screen we’ve all come to know, ensuring that while the interaction method is new, the environment isn’t.

How Can You Incorporate Vision Pro’s Gestures into App Development?

While Apple has laid down the foundational gesture language for navigating visionOS’s user interface and augmented reality apps, third-party developers aren’t left behind.

Using Apple’s visionOS SDK, developers can adopt the standard gestures through frameworks such as SwiftUI and RealityKit. They can also use ARKit’s hand-tracking data to expand on the established gesture library.

However, Apple advises against devising custom gestures that conflict with the core system gestures. The emphasis is on creating gestures that are intuitive, easy to remember, and effortless to perform.
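One practical way to follow this advice is to test a custom gesture detector against poses that represent system gestures before shipping it. The sketch below is a hypothetical illustration only. In a real visionOS app, joint positions would come from ARKit's hand-tracking data. Here, hands are plain dictionaries of fingertip positions in metres, and the sample pinch pose and distance thresholds are invented for the example.

```python
import math

# Hypothetical sample pose for the system pinch: fingertip positions in metres.
SYSTEM_POSES = {
    "pinch": {
        "wrist": (0.0, 0.0, 0.0),
        "thumb_tip": (0.06, 0.05, 0.0),
        "index_tip": (0.07, 0.05, 0.0),
        "middle_tip": (0.06, 0.02, 0.0),
    },
}


class GestureRegistry:
    """Accepts a custom gesture only if it does not fire on any system-gesture pose."""

    def __init__(self, system_poses):
        self.system_poses = system_poses
        self.custom = {}

    def register(self, name, predicate):
        clashes = [g for g, pose in self.system_poses.items() if predicate(pose)]
        if clashes:
            raise ValueError(f"{name!r} conflicts with system gesture(s): {clashes}")
        self.custom[name] = predicate


def open_palm(hand):
    """Custom gesture: thumb spread from index finger, fingertips extended from the wrist."""
    wrist = hand["wrist"]
    return (
        math.dist(hand["thumb_tip"], hand["index_tip"]) > 0.06
        and math.dist(hand["index_tip"], wrist) > 0.12
        and math.dist(hand["middle_tip"], wrist) > 0.12
    )


def loose_pinch(hand):
    """A poorly chosen custom gesture: fingertips within 3 cm overlaps the system pinch."""
    return math.dist(hand["thumb_tip"], hand["index_tip"]) < 0.03


registry = GestureRegistry(SYSTEM_POSES)
registry.register("open_palm", open_palm)  # accepted
try:
    registry.register("loose_pinch", loose_pinch)
except ValueError as err:
    print(err)  # 'loose_pinch' conflicts with system gesture(s): ['pinch']
```

A gesture that is clearly distinct from a pinch, like an open palm, passes this check. It is also easier for users to remember because it can't be confused with the system vocabulary.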

What is the Future of Human-Machine Interaction?

While hand gestures and eye tracking in AR and VR might not be new, Apple’s Vision Pro stands out due to its intuitive design. It elevates user experience by making navigation feel not only natural but also enjoyable.

Moreover, the Vision Pro’s ability to work seamlessly with existing devices like Bluetooth keyboards, mice, trackpads, and game controllers ensures that it’s not just a standalone marvel but a comprehensive gateway into the future of human-machine interaction.

In summary, Apple’s Vision Pro, with its state-of-the-art hand gestures and eye-tracking system, is set to redefine the way we perceive and interact with the virtual world.

Rooted in natural human behaviors and enhanced by cutting-edge technology, it promises an AR and VR experience that’s immersive, intuitive, and groundbreaking.

Takeaway

In essence, the Vision Pro’s system is a beautiful blend of cutting-edge technology and human instinct.

It reads and interprets the most subtle of gestures and gazes, weaving them seamlessly into a digital experience that feels as intuitive and natural as interactions in the physical world.
